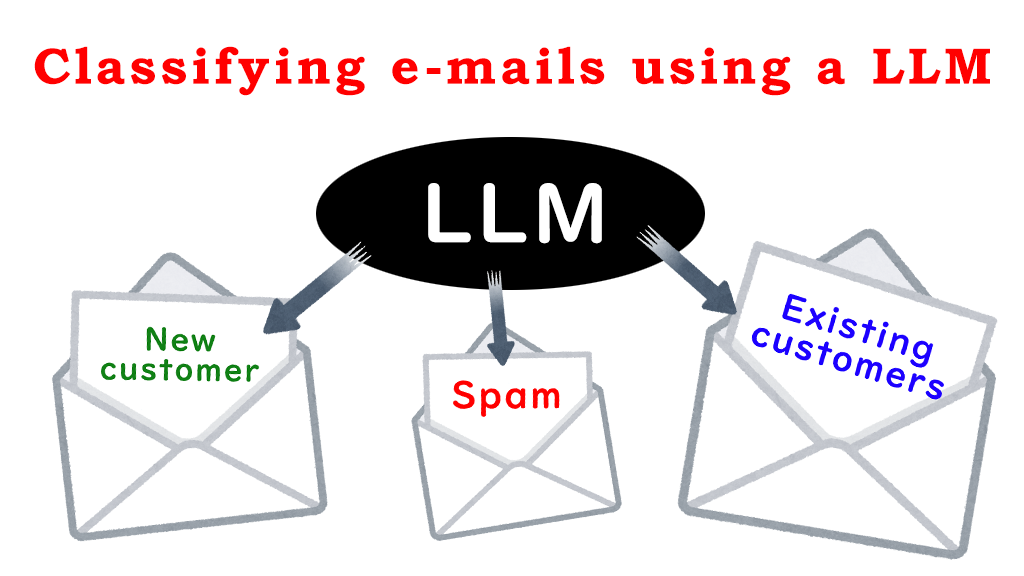

Classifying e-mails using an LLM

Monday, December 16, 2024

In our second article in this blog series, we explored content generation and fine-tuning. Using GPT-2, we demonstrated how to generate a fairy tale while highlighting the role of fine-tuning in enhancing accuracy.

In this final article of our LLM series, we focus on prompt engineering—often simply called prompting—and its importance for controlling the output of LLMs. Understanding this approach is crucial for using LLMs effectively for specific use cases without requiring fine-tuning. To illustrate, we introduce LLM text classification through a simple, industry-inspired example.

Why is prompting important?

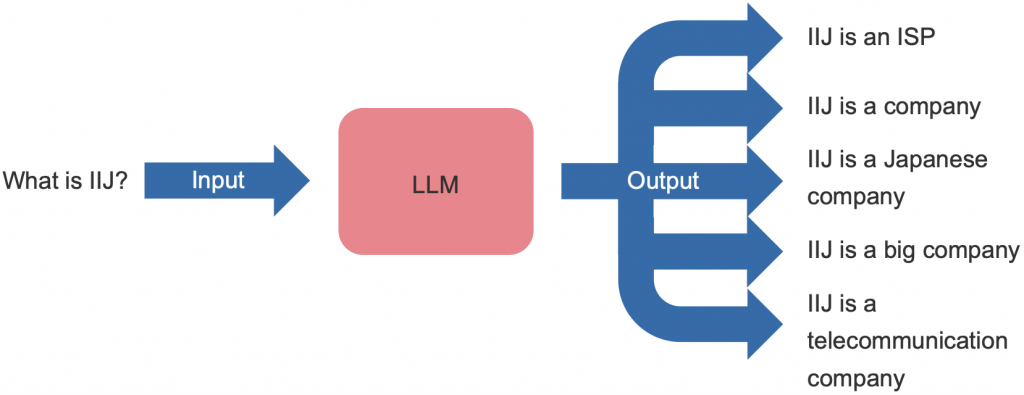

LLMs are stochastic in nature, meaning they can generate different outputs for the same input.

Illustrating how an LLM generates text in a stochastic manner.
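To make the idea of stochastic generation concrete, here is a toy sketch of a single generation step. It is not how any particular model is implemented: the three candidate tokens and their probabilities are invented for illustration, and `sample_token` and `greedy_token` are hypothetical helper names.

```python
import random

# Hypothetical next-token probabilities for the prompt "Once upon a".
# The numbers are invented for illustration only.
next_token_probs = {"time": 0.6, "midnight": 0.25, "hill": 0.15}


def sample_token(probs, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def greedy_token(probs):
    """Always pick the single most probable token."""
    return max(probs, key=probs.get)


rng = random.Random()
sampled = [sample_token(next_token_probs, rng) for _ in range(10)]
print("sampled:", sampled)                         # can differ from run to run
print("greedy: ", greedy_token(next_token_probs))  # always "time"
```

Because each step draws from a probability distribution, two runs with the same input can diverge after only a few tokens.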

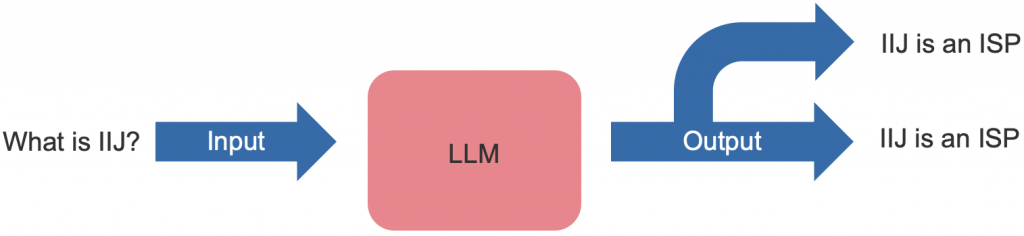

Prompting allows us to control an LLM’s output by providing it with clear and structured instructions. This approach helps the model produce consistent outputs for the same input.

Illustrating how an LLM generates text in a deterministic (not stochastic) manner.

This is important because it enables us to instruct LLMs to perform specific tasks, such as text classification, where consistency is essential—ensuring the same class is always returned for a given input. To achieve this consistency, our prompts should contain clear and straightforward instructions.
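One simple way to check this kind of consistency is to send the same input several times and count the labels that come back. The sketch below assumes a hypothetical `classify` callable that takes a text and returns a label; `label_counts`, `is_consistent`, and the stub classifier are illustrative names, not part of any library.

```python
from collections import Counter


def label_counts(classify, text, runs=5):
    """Call classify(text) several times and count the labels returned."""
    return Counter(classify(text) for _ in range(runs))


def is_consistent(classify, text, runs=5):
    """Return True if every run produced the same label."""
    return len(label_counts(classify, text, runs)) == 1


def stub_classifier(text):
    """Hypothetical stand-in for a real LLM-backed classifier."""
    return "Technical Support"


email = "The Internet LED on my router is red."
print(label_counts(stub_classifier, email))
print(is_consistent(stub_classifier, email))
```

With a real model, `classify` would wrap the LLM call, and a result with more than one distinct label would suggest that the prompt or the sampling settings need tightening.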

Prompting can be quite handy when fine-tuning is not feasible due to resource constraints. By carefully crafting prompts, we can limit the scope of the model’s responses and guide it towards specific objectives. Additionally, prompting provides greater control over interactions with the LLM, allowing for customization and reducing irrelevant responses.

How do we prompt LLMs to perform text classification?

LLMs can do specific tasks when given the right prompt. Here, we explain how to set up a simple prompt for text classification. We demonstrate this with an example of creating an email classifier using Llama 3 8B. Our aim is to create a tool for the customer support team of a company.

We configure Llama 3 8B to sort emails into three categories:

- Spam: for emails that are not relevant to customer support or the company’s products and services

- Technical Support: for emails about technical issues from customers

- New Customer: for emails from potential customers asking about products and services

By using this system, the customer support team can manage its emails more efficiently and make sure customer questions go to the right place for help.

How difficult is it to create this system? Actually, it is not too difficult. We just need to create a prompt with clear instructions. In our first article, we showed how to use the llama.cpp library to run LLMs on a computer. Here, we use llama.cpp again, this time through its Python bindings, llama-cpp-python.

First, let’s install the Python bindings using pip:

pip install llama-cpp-python

After installing the library, we can start by downloading Llama 3 8B from Hugging Face. Llama 3 is one of the state-of-the-art large language models from Meta, and it comes in two versions: one with 8 billion parameters and another with 70 billion parameters. For our use case, we use the 8-billion-parameter version, in a quantized GGUF build of the instruction-tuned model, because it is lighter and easier to run on a typical computer.

wget https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/resolve/main/meta-llama-3-8b-instruct.Q5_K_M.gguf

Once the model is downloaded, we can initialize it using llama.cpp in Python. We set the n_gpu_layers parameter to -1 so that all of the model’s layers are offloaded to the GPU. This requires a llama-cpp-python build with GPU support.

from llama_cpp import Llama

llm = Llama(
    model_path="meta-llama-3-8b-instruct.Q5_K_M.gguf",
    n_gpu_layers=-1,
)
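If the download failed or the path is wrong, the loading error can be hard to read. As an optional sketch, the hypothetical `load_model` helper below checks for the file first and then builds the model with the same settings as above. The `n_ctx` value of 2048 is an arbitrary assumption, and `verbose=False` only silences the loading logs.

```python
from pathlib import Path


def load_model(model_path: str, n_ctx: int = 2048):
    """Return a llama_cpp.Llama instance, failing early if the file is missing."""
    path = Path(model_path)
    if not path.is_file():
        raise FileNotFoundError(f"Model file not found: {path}")

    # Imported here so the path check runs before the library is needed.
    from llama_cpp import Llama

    return Llama(
        model_path=str(path),
        n_gpu_layers=-1,  # offload all layers to the GPU when the build supports it
        n_ctx=n_ctx,      # context window in tokens (assumed value)
        verbose=False,    # silence llama.cpp's loading logs
    )


# Usage, once the model file is in the working directory:
# llm = load_model("meta-llama-3-8b-instruct.Q5_K_M.gguf")
```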

Next, we set up the prompt template. Let’s pause here for a moment and explain what the prompt template is. As the name suggests, it is a template for the prompt that we provide as input to the LLM. Different LLMs often use different prompt templates. The template is defined by the developer of the LLM, and it lets the model distinguish the system’s instructions and task from the user’s input.

In the system’s instructions and task section, we define the task that we want the LLM to perform along with the corresponding instructions. In the user’s input section, we define the input for the task that we want to perform. It is crucial not to edit the prompt template except for the part that the LLM’s developer specifies, as otherwise, the LLM may not be able to perform the task accurately.

prompt_template = """

<|begin_of_text|><|start_header_id|>system<|end_header_id|>

{system_prompt}<|eot_id|><|start_header_id|>user<|end_header_id|>

{user_message}<|eot_id|><|start_header_id|>assistant<|end_header_id|>

"""

After defining the prompt template, we continue with the system’s instructions and task section. We define the task and instructions for the email classifier as follows.

system_prompt = """
Task: Categorize the text into the following categories:
1. Spam
2. Technical Support
3. New Customer

Instructions: You are an assistant in the customer support team of a networking company. Your company deals with setting up new Internet connections and provides assistance if customers encounter problems connecting to the Internet.

Note: Do not include any explanations or apologies in your responses.
"""

These simple instructions give the LLM a clear context, which helps it generate more relevant answers.

Next, we proceed to the user’s input section. In this section, we include exactly the text that the user has provided, without making any changes. Then, we insert the system prompt and the user’s message into the prompt template and pass the resulting prompt to the LLM.

user_message = """

Hello,

The Internet led is red in my router. What should I do?

Thanks

"""

prompt = prompt_template.replace("{system_prompt}", system_prompt).replace("{user_message}", user_message)

output = llm(prompt)["choices"][0]["text"]

print(output)

The LLM classifies the text into the expected category.

Technical Support
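Since we repeat the same steps for every email (fill the template, call the model, read the generated text), they can be wrapped in a small helper. The sketch below is one possible arrangement rather than the only way to do it: `build_prompt` and `classify` are hypothetical names, the template is restated so the block is self-contained, and the values `max_tokens=8` and `temperature=0.0` are assumptions chosen to keep the answer short and to reduce randomness in sampling.

```python
PROMPT_TEMPLATE = (
    "<|begin_of_text|><|start_header_id|>system<|end_header_id|>\n\n"
    "{system_prompt}<|eot_id|><|start_header_id|>user<|end_header_id|>\n\n"
    "{user_message}<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n"
)


def build_prompt(system_prompt: str, user_message: str) -> str:
    """Fill the two placeholders and leave the special tokens untouched."""
    return (
        PROMPT_TEMPLATE
        .replace("{system_prompt}", system_prompt.strip())
        .replace("{user_message}", user_message.strip())
    )


def classify(llm, system_prompt: str, user_message: str) -> str:
    """Run one classification and return the generated text, stripped."""
    prompt = build_prompt(system_prompt, user_message)
    # max_tokens and temperature are keyword arguments accepted when
    # calling a llama_cpp.Llama object; the values here are assumptions.
    result = llm(prompt, max_tokens=8, temperature=0.0)
    return result["choices"][0]["text"].strip()


# Usage with the model and prompts defined earlier in the article:
# label = classify(llm, system_prompt, user_message)
# print(label)
```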

We can try another message, one that does not relate to the company’s products or services.

user_message = """

This is a new bicycle in five different colors.

For more information please call to the following number ...

"""

prompt = prompt_template.replace("{system_prompt}", system_prompt).replace("{user_message}", user_message)

output = llm(prompt)["choices"][0]["text"]

print(output)

Again, the LLM accurately classifies the text as spam.

Spam

Finally, let’s look at a message from a potential new customer.

user_message = """

I am interesting to the new offer that you advertise in your website.

Could you please explain me what the offers include?

Thank you!

"""

prompt = prompt_template.replace("{system_prompt}", system_prompt).replace("{user_message}", user_message)

output = llm(prompt)["choices"][0]["text"]

print(output)

And once more, the LLM gets it right.

New Customer
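In the three examples, the model returns exactly the category name, but an LLM may occasionally add whitespace, a list number, or extra words. Before using the result in a real workflow, it can help to map the raw text onto one of the known categories. The following is a minimal sketch under that assumption: `normalize_label` and the `"Unknown"` fallback are hypothetical and not part of the original setup.

```python
CATEGORIES = ("Spam", "Technical Support", "New Customer")


def normalize_label(raw_output: str, fallback: str = "Unknown") -> str:
    """Map raw model text to one of CATEGORIES, or to the fallback."""
    cleaned = raw_output.strip().lower()

    # Exact match, ignoring case and surrounding whitespace.
    for category in CATEGORIES:
        if cleaned == category.lower():
            return category

    # Accept outputs such as "2. Technical Support" or "Category: Spam",
    # but only when exactly one category name appears in the text.
    matches = [c for c in CATEGORIES if c.lower() in cleaned]
    return matches[0] if len(matches) == 1 else fallback


print(normalize_label(" Technical Support\n"))
print(normalize_label("Category: spam"))
print(normalize_label("I am not sure."))
```

Emails that end up with the fallback label could then be forwarded to a person for manual sorting instead of being routed automatically.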

That’s it! We have successfully created an email classifier using an LLM, without needing to write complex code or possess advanced programming skills.

The end

In this final article, we demonstrated how to create an email classifier using an LLM and explained the importance of prompting for guiding LLM behavior. By leveraging the same approach, we can develop applications for a wide range of traditional NLP tasks. For other introductory LLM material, we highly recommend watching the IIJ Research Laboratory seminar video, Generative AI — An Introduction for Beginners.