The era of Large Language Models (LLMs)

Tuesday, June 11, 2024

This article is the first in a three-part series exploring the rising popularity and diverse applications of Large Language Models (LLMs). The series also provides straightforward tutorials for running LLMs, even for readers without prior knowledge of computer science or programming.

The beginning…

Large Language Models (LLMs) have become popular worldwide, and whenever a major AI company releases a new LLM, it often becomes a top search term on search engines. Why are LLMs attracting so much attention? Because they are now widely used for many different tasks, both commercially and personally. For example, many programmers use Code Llama to assist them in developing applications (code generation): it can generate code, and natural-language text about code, from both code and English prompts.

The power of LLMs

LLMs were developed as tools to support and accelerate language-related tasks such as organizing documents based on common themes (text classification), automating mail replies (content generation), filtering posts to exclude harmful content (toxicity classification), and much more. As a result, they can automate various tasks for enterprises, helping them minimize expenses, maximize productivity, and provide innovative and improved services to their clients.

To better understand the power of LLMs, consider a real use case from Korea Telecom (KT), in which an LLM assists the customer support team. KT receives a large number of customer inquiries, and reading and classifying them takes a lot of time and requires a large staff. By developing an LLM to help handle this volume, the customer support team automates the reply process and also extracts valuable customer insights that the company can use for its product campaigns.

Running LLMs is simple!

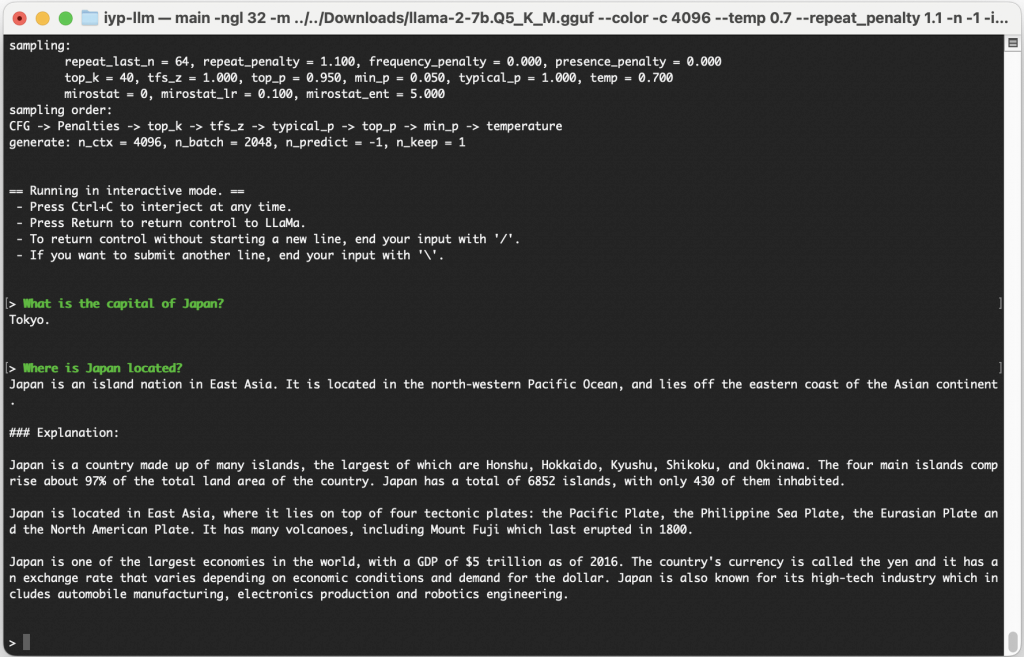

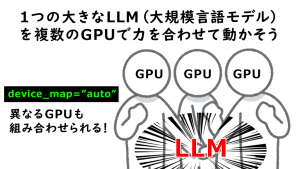

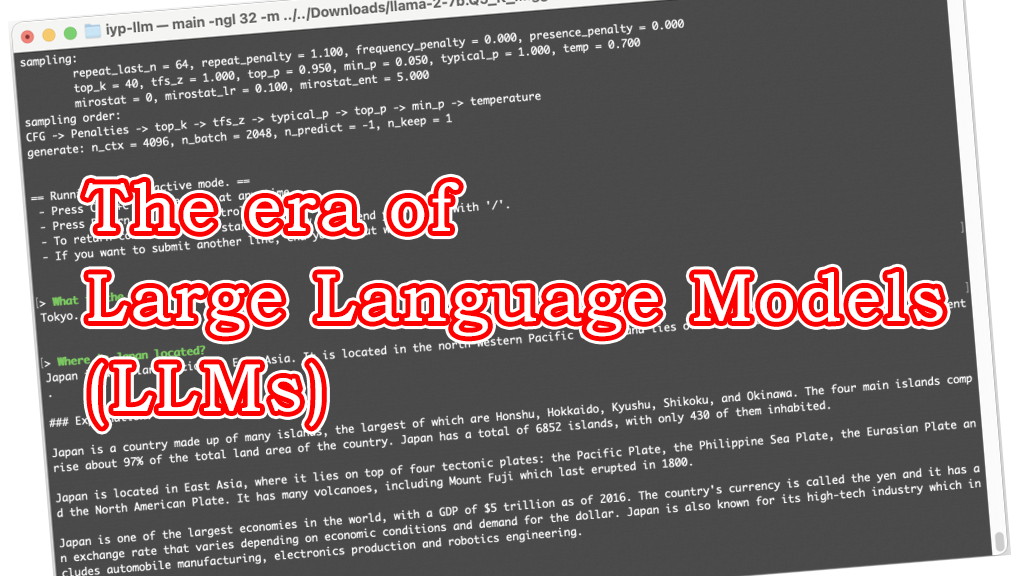

Although LLMs are complex, there are now a few good specialized libraries that can compress a deep learning model (a technique known as quantization) so that it runs even on consumer computers. For example, Llama 2 7B runs on a laptop with 16GB of memory (if you want to learn more about Llama 2 7B, check out the paper “Llama 2: Open Foundation and Fine-Tuned Chat Models”). One library that can be used for running LLMs is llama.cpp. As the suffix suggests, this library is written in C++ in order to make the most of the hardware. Below, we show how to run Llama 2 7B and start chatting with it!
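To get a feel for why a 7-billion-parameter model can fit on such a laptop, here is a rough back-of-the-envelope estimate of the memory needed just to hold the model's weights. It is only a sketch: the function name `estimate_weights_gb` is made up for this example, the figure of about 5.5 bits per weight for a compressed model is an illustrative assumption, and the estimate ignores the extra memory used while the model is running.

```shell
# Rough estimate of the memory needed to hold a model's weights, in gigabytes.
# Usage: estimate_weights_gb <parameters_in_billions> <bits_per_weight>
# (hypothetical helper, not part of llama.cpp)
estimate_weights_gb() {
    # billions of parameters * bits per weight / 8 bits per byte = gigabytes
    awk -v p="$1" -v b="$2" 'BEGIN { printf "%.1f\n", p * b / 8 }'
}

estimate_weights_gb 7 16    # uncompressed 16-bit weights: prints 14.0
estimate_weights_gb 7 5.5   # assumed ~5.5 bits per weight: prints 4.8
```

Under these assumptions, the uncompressed weights alone would nearly fill a 16GB laptop, while the compressed version leaves plenty of room for the operating system and other programs.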

Step 1

First, we need to download the Llama 2 7B model using the following command:

wget https://huggingface.co/TheBloke/Llama-2-7B-GGUF/resolve/main/llama-2-7b.Q5_K_M.gguf

While we wait for the download to finish, let’s take a brief look at the model, without going into detail about its architecture or how it actually works. In this example, we use it as a black box!

Meta announced the Llama 2 model on July 18, 2023. The model is openly available and free to use for both research and commercial purposes, subject to the terms of Meta’s license. It officially comes in three sizes (7, 13, and 70 billion parameters), was pre-trained on 2 trillion tokens, and also comes in variants fine-tuned for chat use cases. Although there are only a few official variations, many others are freely available from the community.

Looking at the URL we used to download the model, we notice that it is hosted not by Meta but on Hugging Face. Why? Hugging Face is a major community website, similar to GitHub, for sharing pre-trained deep learning models (if you want to learn more about Hugging Face, check out the Japanese-language article “Hugging Face Pipelineを使ったお手軽AIプログラミング”, which translates to “Easy AI Programming with Hugging Face Pipeline”). The latest LLMs from Google, Meta, Microsoft, IBM, and others, along with instructions for running them, are available there.
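Once the download from Step 1 has finished, a quick sanity check can confirm that the file really is a model and not, for example, an error page saved under the same name. Files in the GGUF format begin with the four ASCII characters `GGUF`, so the sketch below simply looks at the first four bytes. The helper name `is_gguf` is made up for this example, and the check does not detect a download that was cut off partway through.

```shell
# Succeeds (exit status 0) if the file starts with the four ASCII bytes "GGUF".
# (hypothetical helper, not part of llama.cpp)
is_gguf() {
    [ "$(head -c 4 "$1" 2>/dev/null)" = "GGUF" ]
}

# Check the file downloaded in Step 1.
if is_gguf llama-2-7b.Q5_K_M.gguf; then
    echo "Looks like a GGUF model file"
else
    echo "Not a GGUF file; the download may have failed or is missing"
fi
```

If the second message appears, running the download command from Step 1 again is the simplest fix.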

Step 2

Once the model has been downloaded, we move on to the second step: downloading the library needed to run the LLM. As mentioned earlier, there are a few good libraries for running LLMs, but for this demonstration we use llama.cpp (it’s quite popular in the community!). The library works on macOS, Linux, and Windows, but the build instructions differ by platform. For the rest of this article, we assume macOS or Linux (for Windows, please refer to the corresponding section of the library’s documentation). To download and compile the library, we use the following command:

wget https://github.com/ggerganov/llama.cpp/archive/refs/tags/b2589.zip && unzip b2589.zip && rm b2589.zip && make -C llama.cpp-b2589

Step 3

The final step is to run the LLM and start interacting with it, using the following command:

./llama.cpp-b2589/main -ngl 32 -m llama-2-7b.Q5_K_M.gguf --color -c 4096 --temp 0.7 --repeat_penalty 1.1 -n -1 -i -ins

That’s it! Now, we can start chatting with the LLM!
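For readers curious about what the options in the Step 3 command mean, the sketch below rebuilds the same command from named settings, with a comment on each. The variable names and the `chat_command` helper are made up for this example; the option meanings follow the help text of this llama.cpp version as we understand it, so treat them as a guide rather than a reference.

```shell
# Hypothetical variable names; the values are the ones used in Step 3.
MODEL="llama-2-7b.Q5_K_M.gguf"  # -m: path to the model file from Step 1
GPU_LAYERS=32                   # -ngl: model layers to offload to a GPU (only matters in GPU-enabled builds)
CONTEXT=4096                    # -c: size of the context window, in tokens
TEMPERATURE=0.7                 # --temp: sampling temperature; lower values give more predictable replies
REPEAT_PENALTY=1.1              # --repeat_penalty: values above 1.0 discourage repeated text
MAX_TOKENS=-1                   # -n: number of tokens to generate; -1 means no fixed limit

# The remaining flags take no value:
#   --color  colors the output to tell our input apart from the model's text
#   -i       runs in interactive mode
#   -ins     runs in instruction mode

# Print the full command so it can be inspected before running it.
chat_command() {
    printf '%s %s\n' \
        "./llama.cpp-b2589/main -ngl $GPU_LAYERS -m $MODEL --color -c $CONTEXT" \
        "--temp $TEMPERATURE --repeat_penalty $REPEAT_PENALTY -n $MAX_TOKENS -i -ins"
}

chat_command
```

Changing a value such as `TEMPERATURE` and printing the command again is an easy way to experiment with different settings.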

While chatting with Llama 2 7B, we may notice that it sometimes makes mistakes, even on simple questions. This is to be expected: the model we chose for this example has only 7 billion parameters, which is relatively small compared to other state-of-the-art models, although that small size is what lets it run with modest computing resources.

A new era has started

LLMs are the new big thing in the AI industry, and they offer many advantages. However, this state-of-the-art technology also comes with quite a few challenges. First, creating or fine-tuning an LLM requires a lot of time and computing resources. Second, an off-the-shelf pre-trained LLM may not achieve high accuracy on specialized tasks, because it has limited knowledge of them. But remember that this technology was first introduced in 2018 and has achieved great results in just six years, so it is worth being part of this new era!

In the next article, we will go through content generation and fine-tuning using a simple example of generating Japanese fairy tales. Stay tuned!